AT A GLANCE

- AI-Driven Workload Automation: AI, especially Google’s Gemini Gen AI, is changing enterprise automation. It improves efficiency in coding, data retrieval, and orchestration, while reducing manual work.

- Optimizing Large Language Models (LLMs): Gemini 1.5 Pro’s long-context processing can eliminate the need for RAG when your data fits inside the context window. It lets AI process vast data sets directly, which speeds up and improves AI-powered development.

- Multi-Agent AI Systems for Productivity: Multi-agent systems split work across specialized agents (e.g., Manager, Developer, QA agents), each focused on a different task. Compared with a single AI model, they improve accuracy and efficiency in enterprise workflows.

- Google-Broadcom Strategic AI Partnership: Broadcom’s strong partnership with Google Cloud boosts AI skills in various fields. It offers advanced automation, smart workload management, and smooth cloud services for businesses.

FULL TEXT

The Google-led demo of Gemini Gen AI at Broadcom’s Automation Virtual Summit made me glad the partnership between Broadcom and Google goes so deep.

AI will transform Workload Automation. You’re gonna wanna see this.

Or at least parts of it.

The best moments are at 2:30 and 14:20.

Today, we’re diving into Jake Chen’s presentation on how to take generative AI from mere prompts to full-fledged production in the enterprise world.

We’ll walk through the typical steps that companies like Broadcom are taking to develop AI-driven applications, all while using Vertex AI, Google Cloud’s all-in-one platform for AI and machine learning.

How to Transition Generative AI from Ideas to Enterprise Reality - Use Ready-Made Solutions

Chen kicked things off with a step you don’t want to miss: not wasting your time.

Before you start crafting your very own Large Language Model (LLM), take a moment to see if something already exists that can do the job.

There are plenty of tried-and-true solutions out there – think translation, transcription, and text-to-speech services.

Sure, multi-modal LLMs can handle these tasks, but don’t reinvent the wheel when robust options like Contact Center AI are already built on top of LLMs.

Building LLM Applications: From Prompts to Power

The first real step? Prompt engineering.

We all know the drill: you ask a question, and if the LLM has been trained on the right data, it spits out a response.

But here’s the catch: for LLMs to tackle new and mysterious cases, they first need to be introduced to the information. The most common way to do this is through something called “retrieval augmented generation” or RAG – think of it as the LLM’s way of Googling for answers.

However, with the arrival of long-context understanding features, like those in Gemini 1.5 Pro, you can simply drop a ton of information right into the prompt, skip RAG, and still get what you want.
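To make that concrete, here’s a minimal Python sketch of the “just put it all in the prompt” approach. It’s an illustration, not Chen’s demo code: the 4-characters-per-token estimate, the `CONTEXT_WINDOW_TOKENS` budget, and the function names are all assumptions, and in practice you’d use the model’s own token counter.

```python
# Rough heuristic, not a real tokenizer: about 4 characters per token (assumption).
CHARS_PER_TOKEN = 4
# Hypothetical budget; the real limit depends on the model and version you use.
CONTEXT_WINDOW_TOKENS = 1_000_000


def estimate_tokens(text: str) -> int:
    """Crude token estimate based on character count."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def build_long_context_prompt(question: str, documents: dict[str, str]) -> str:
    """Concatenate every document into one prompt, each under its own header."""
    parts = [f"--- FILE: {name} ---\n{body}" for name, body in documents.items()]
    return "\n\n".join(parts) + f"\n\nQuestion: {question}"


def fits_in_context(prompt: str, limit: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """True when the estimated prompt size stays inside the context window."""
    return estimate_tokens(prompt) <= limit


docs = {
    "animation.js": "function animate() { mixer.update(delta); }",
    "README.md": "Examples for character animation.",
}
prompt = build_long_context_prompt("How do I slow the animation down?", docs)
print(fits_in_context(prompt))
```

If `fits_in_context` comes back false, that’s your cue that the material is too big for the prompt and you’ll need some form of retrieval instead.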

Revolutionizing Developers' Approach to Coding Challenges

Chen showcased the long-context capabilities of Google’s Gemini 1.5 Pro by asking the model to sift through a hefty 800,000 tokens of code from three.js examples to help users learn about character animation.

The model extracted relevant examples from the pile of content, identified demo controls, and customized code, for example by adding a slider to manipulate animation speed. The model even modified terrain and tweaked text in 3D demos.

You really might want to watch it modify code here at 2:30. Gave me a thrill.

Related: Learn more at deepmind.google/gemini

Turning Chaos into Clarity: AI in Development

In the whirlwind of Broadcom projects, where documentation, specifications, and a mountain of examples can feel overwhelming, AI can come to the rescue.

By feeding their context into the prompt, developers can simplify the entire process.

It’s like having a super-smart assistant who takes a messy desk and magically organizes it into neat, actionable insights. Suddenly, what once felt like deciphering hieroglyphics becomes straightforward.

Let’s face it, when your AI can handle the heavy lifting, you can focus on the fun stuff – like wondering why you didn’t invest in Tesla sooner.

Enhancing LLM Performance: Key Strategies

To boost the performance of Large Language Models (LLMs), Chen suggests these strategies:

- Provide Clear Instructions: Make your prompts specific to guide the model effectively.

- Assign a Role: Set a context for the model by assigning it a specific role or persona.

- Use System Instructions: Outline how to achieve goals, including defining output formats and rules.

- Include Examples: Show the model what a good response looks like by including relevant examples.

- Add Contextual Information: Provide necessary background details to help the model solve the problem.

- Structure Your Prompts: Organize your prompts with headers or tags to enhance clarity.

- Explain Reasoning: Ask the model to articulate its thought process, which can improve accuracy.

- Break Down Complex Tasks: Simplify large tasks into manageable steps.

- Experiment with Parameters: Adjust parameter values to find optimal settings for performance.
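Several of these tips (role, system instructions, examples, context, structure, and reasoning) can live in a single prompt template. Here’s a rough sketch of what that might look like; the tag names, the `build_prompt` helper, and the sample job are hypothetical, not something shown in the presentation.

```python
def build_prompt(role, instructions, examples, context, task):
    """Assemble a tagged prompt: role, rules, examples, context, then the task."""
    example_text = "\n".join(
        f"Input: {inp}\nOutput: {out}" for inp, out in examples
    )
    sections = [
        ("ROLE", role),
        ("INSTRUCTIONS", "\n".join(f"- {rule}" for rule in instructions)),
        ("EXAMPLES", example_text),
        ("CONTEXT", context),
        # Asking for step-by-step reasoning is the "explain reasoning" tip.
        ("TASK", task + "\nExplain your reasoning step by step before answering."),
    ]
    return "\n\n".join(f"<{tag}>\n{body}\n</{tag}>" for tag, body in sections)


prompt = build_prompt(
    role="You are a workload automation engineer.",
    instructions=["Answer in one short paragraph.", "Name the job you refer to."],
    examples=[("Job A failed with exit code 1", "Restart job A after checking its log.")],
    context="Job NIGHTLY_LOAD failed with exit code 12.",
    task="Recommend the next action.",
)
print(prompt)
```

Parameter tuning (temperature and friends) happens in the model call itself, so it’s left out of this sketch.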

LLM Grounding: When Your Data Exceeds the Context Window Allowance

When your context exceeds the 2,000,000-token limit, grounding your Large Language Model (LLM) becomes essential. The go-to method for grounding on large datasets is Retrieval-Augmented Generation (RAG).

Building a RAG pipeline involves parsing, chunking, embedding, and loading data into a vector database. It’s just way more work than it’s worth, especially when there’s the option of Vertex AI Search.
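For a sense of what those four stages involve, here’s a toy, standard-library-only sketch. The bag-of-words `embed` function and the in-memory `VectorStore` are hypothetical stand-ins for illustration; a real pipeline would use an embedding model and an actual vector database.

```python
import math
import re
from collections import Counter


def parse(raw: str) -> str:
    """Parsing: collapse stray whitespace and line breaks into clean text."""
    return " ".join(raw.split())


def chunk(text: str) -> list[str]:
    """Chunking: one chunk per sentence (real pipelines tune size and overlap)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text) if s]


def embed(text: str) -> Counter:
    """Embedding: a toy bag-of-words vector standing in for a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class VectorStore:
    """Loading and retrieval: an in-memory stand-in for a vector database."""

    def __init__(self):
        self.rows = []

    def load(self, chunks):
        self.rows.extend((c, embed(c)) for c in chunks)

    def search(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


raw = ("Job ALPHA runs nightly at 02:00\n and loads sales data.   "
       "Job BETA runs weekly on Sunday and archives old logs.")
store = VectorStore()
store.load(chunk(parse(raw)))
top = store.search("When does the job that archives logs run?")
grounded_prompt = f"Context:\n{top[0]}\n\nQuestion: When does the log archive job run?"
print(grounded_prompt)
```

Even this toy version needs five moving parts before the model sees a single word, which is the whole argument for letting a managed service do it.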

Vertex AI Search is a managed service that simplifies this entire process.

Broadcom teams are already leveraging Vertex AI Search because it allows us to skip building our own RAG pipeline.

Alternatively, we can ground an LLM using Google search results.

While this method may not utilize your organization’s specific data, it helps reduce hallucinations, and it allows the model to retrieve up-to-date information, such as news and upcoming events.

Understanding AI Agents and Multi-Agentic Orchestration

In Step 3, Chen moved into agentic and multi-agentic orchestrations.

As you know, AI agents are autonomous applications that come with a specific role or persona and are equipped with a set of tools to achieve their assigned goals.

Three Key Components of an AI Agent:

- The Model: This includes advanced versions like Gemini 1.5 Pro.

- The Tools: These consist of APIs, functions, databases, and even other agents, enabling multi-agent orchestration.

- Orchestration: This involves the behind-the-scenes coordination of the agent’s profile, goals, instructions, memory management, reasoning, and planning.
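Here’s one way those three components might fit together in code. This is a bare-bones sketch, not how Vertex AI Agent Builder works under the hood: `scripted_model` is a hard-coded stand-in for a real LLM call, and the `job_status` tool and job name are made up for the example.

```python
from dataclasses import dataclass, field
from typing import Callable


def scripted_model(goal: str, memory: list[str]) -> dict:
    """Stand-in for an LLM call: picks a tool first, then answers from memory."""
    if not memory:
        return {"action": "tool", "name": "job_status", "arg": "NIGHTLY_LOAD"}
    return {"action": "final", "text": f"{goal} -> {memory[-1]}"}


@dataclass
class Agent:
    model: Callable[[str, list[str]], dict]          # the model
    tools: dict[str, Callable[[str], str]]           # the tools
    memory: list[str] = field(default_factory=list)  # state kept by orchestration

    def run(self, goal: str, max_steps: int = 5) -> str:
        """Orchestration loop: ask the model, run the tool it picks, remember the result."""
        for _ in range(max_steps):
            decision = self.model(goal, self.memory)
            if decision["action"] == "final":
                return decision["text"]
            tool = self.tools[decision["name"]]
            self.memory.append(tool(decision["arg"]))
        return "Stopped: step limit reached."


agent = Agent(
    model=scripted_model,
    tools={"job_status": lambda job: f"{job} finished OK"},
)
answer = agent.run("Report the status of the nightly load")
print(answer)
```

The `max_steps` cap is a design choice worth keeping in any real version: it stops an agent that never reaches a final answer from looping forever.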

For those eager to create their first agentic applications, we highly recommend using Vertex AI Agent Builder, which offers a ‘no-to-low code’ solution for developing these applications.

Related: Broadcom plans to go AGENT-LESS for Automic and AutoSys Workload Automation.

Multi-Agent Orchestration: Teamwork in AI

Now we dive into the concept of multi-agent orchestration, where we think of a team of individual agents, each with distinct roles and tools.

Imagine a manager agent that assigns tasks to a developer agent for coding and a QA agent for testing. This division of labor means that each agent can focus on what they do best.

By breaking tasks down into smaller components, LLMs can excel at completing these individual tasks rather than getting overwhelmed by a long list of complex requirements in a single prompt.

Studies even show that a multi-agent approach using simpler models can outperform a single, advanced LLM.
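The manager, developer, and QA split is easy to picture as three small functions. In this sketch each “agent” is a plain Python stub rather than a model call, and all the names are hypothetical; the point is only the shape of the hand-off.

```python
def developer_agent(task: str) -> str:
    """Stand-in for a coding model: returns source code for the task."""
    return "def add(a, b):\n    return a + b\n"


def qa_agent(source: str) -> bool:
    """Stand-in for a testing model: runs the code against a fixed check."""
    namespace: dict = {}
    # exec is tolerable here only because the source is our own stub;
    # model-generated code should run in a sandbox.
    exec(source, namespace)
    return namespace["add"](2, 3) == 5


def manager_agent(request: str, max_attempts: int = 2) -> dict:
    """Hands the request to the developer, then to QA, retrying on failure."""
    for attempt in range(1, max_attempts + 1):
        source = developer_agent(request)
        if qa_agent(source):
            return {"status": "approved", "attempts": attempt, "source": source}
    return {"status": "rejected", "attempts": max_attempts, "source": None}


result = manager_agent("Write a function that adds two numbers")
print(result["status"], result["attempts"])
```

Each function gets one narrow job and a short input, which is the same reason the real agents tend to do better than one model handed everything at once.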

Additionally, LLMs can tap into functions and APIs to enhance their capabilities. For instance, if someone asks where to catch a movie in Mountain View, the model uses its tools to create a structured data request, translating natural language into actionable queries.
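To show what that structured data request could look like, here’s a small sketch of the application side of function calling. The `find_theaters` declaration, its parameters, and the JSON shape of `model_output` are assumptions for illustration; the exact format depends on the model API you use.

```python
import json

# Hypothetical function declaration the model is told about.
FIND_THEATERS = {
    "name": "find_theaters",
    "description": "Find theaters showing a movie near a location.",
    "parameters": {"location": str, "movie": str},
    "required": ["location"],
}


def find_theaters(location: str, movie: str = "") -> list[str]:
    """Stand-in for a real showtimes API."""
    return [f"Example Cinema, {location}"]


def dispatch(call_json: str) -> list[str]:
    """Validate the model's structured request against the declaration, then run it."""
    call = json.loads(call_json)
    if call["name"] != FIND_THEATERS["name"]:
        raise ValueError(f"Unknown function: {call['name']}")
    args = call.get("args", {})
    for key in FIND_THEATERS["required"]:
        if key not in args:
            raise ValueError(f"Missing required argument: {key}")
    for key, value in args.items():
        expected = FIND_THEATERS["parameters"].get(key)
        if expected is None or not isinstance(value, expected):
            raise ValueError(f"Bad argument: {key}")
    return find_theaters(**args)


# What a model might emit for "Where can I catch a movie in Mountain View?"
model_output = '{"name": "find_theaters", "args": {"location": "Mountain View"}}'
theaters = dispatch(model_output)
print(theaters)
```

The model never calls the API itself. It only produces the request; your code checks it and makes the call, which keeps you in control of what actually runs.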

The Future of AI: Project Astra Unveiled

Chen ended with a sneak peek into the future of AI with Google’s Project Astra.

This innovative initiative showcases the potential of AI assistants that communicate in real time using sounds, videos, and images. Gemini demonstrated its prowess by identifying sounds, answering questions about code, and even locating a lost item it had noticed while being walked around the room. It’s like having a personal assistant who can also play detective.

What’s really exciting is the promise of personalized generative AI applications, where each experience can be tailored based on a user’s interactions.

Check out the 14:20 mark in the video.

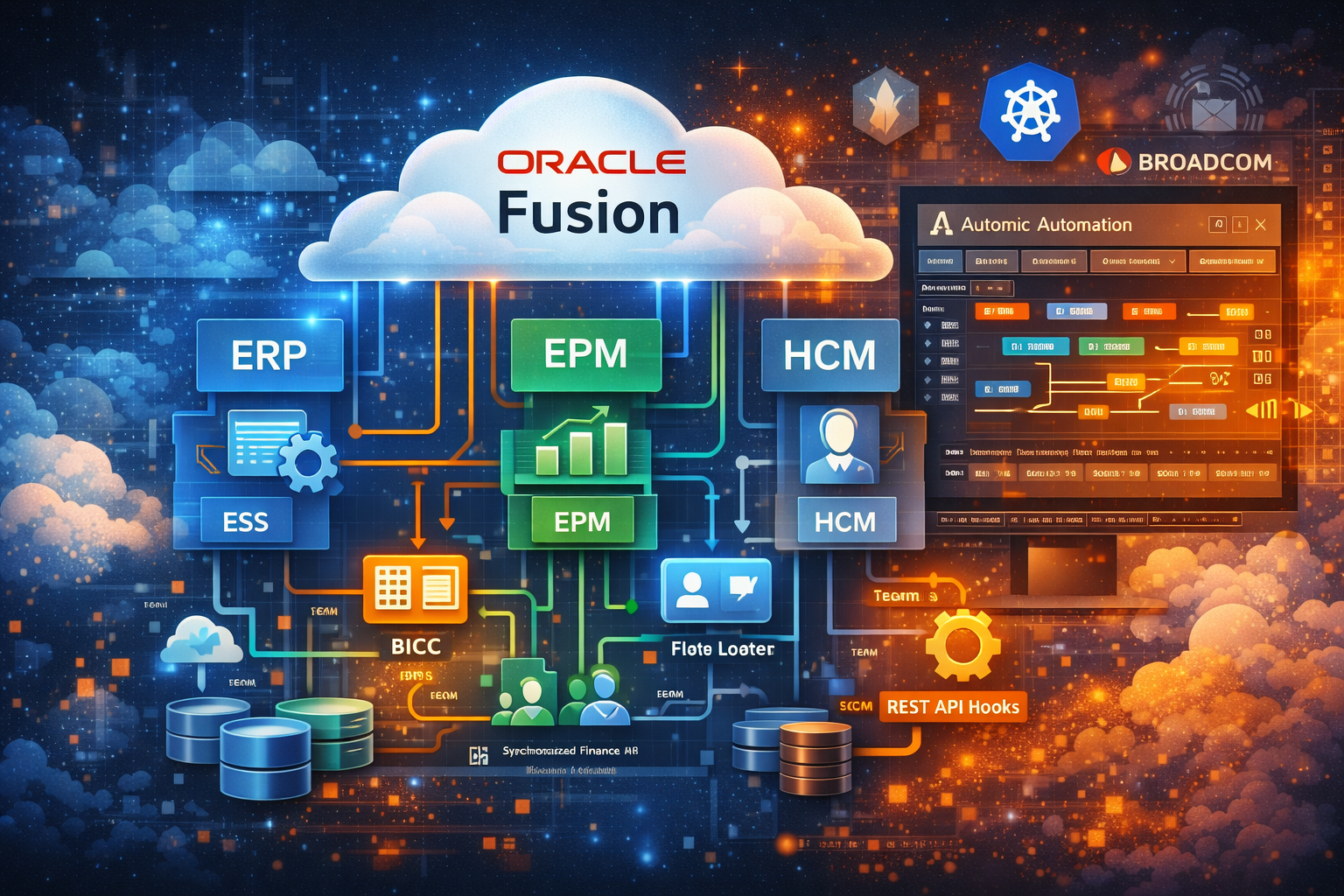

The Dynamic Partnership Between Google and Broadcom

And let’s not forget the dynamic partnership between Google and Broadcom, who both leverage shared technologies to enhance cloud services. Their collaboration is a win-win for all of us.

Here are some key highlights of their partnership:

- Google is a Major Customer: Google relies heavily on Broadcom semiconductors to power its products.

- Long-Term Commitment: Broadcom has a steadfast commitment to Google Cloud, ensuring robust service and support.

- Executive Relationships: Both companies maintain executive relationships at the highest level, fostering strategic alignment.

- Mutual Technology Use: Broadcom integrates Google technology into its own offerings, enhancing product capabilities.

- Cross-Utilization of Technologies: Google also incorporates Broadcom technology into its offerings, showcasing a collaborative approach.

- Products on Google’s Marketplace: Broadcom lists several products on Google’s marketplace, including Automic SaaS, making them easily accessible.

- GCVE Offering: Google provides GCVE (Google Cloud VMware Engine), which is built on VMware, now owned by Broadcom, further integrating their services.

- Broadcom’s Google Subscriptions: In addition to traditional cloud services, Broadcom subscribes to several Google products, such as Workspace, Chronicle, and Mandiant.

RMT: Broadcom's #1 Automation Partner

RMT proudly stands as Broadcom’s #1 Automation partner, a title we’ve held since Broadcom acquired CA Technologies. Not only that, but we also earned the distinction of being Broadcom’s GEO Partner of the Year, making us the top partner among all partners!

If you have any questions about Workload Automation, don’t hesitate to reach out to my team.

Say Bob sent you.