AT A GLANCE

- Flexible LLM Connectivity Enables Rapid Deployment: Companies can connect Automation AI Services to Gemini in Automic SaaS, to an on-premises Llama model, or to a private-cloud LLM. A simple properties file and one system setting establish the connection.

- Analyze Function Delivers Instant Root-Cause Insight: Automic AI explains JCL scripts and turns cryptic Windows and SQL errors into plain language. It identifies the failure, lists likely causes, and outlines clear remediation steps.

- ASK_AI Generates Production-Ready Code and Fixes: One script call creates PowerShell, SQL, or Python snippets on demand. The same function can append resolution guidance to alerts, cutting mean time to repair.

- Natural-Language Requests Drive End-to-End Workflows: Business users ask, through Slack or Teams, for a refresh of recent orders. Automic then generates SQL, runs Python to load BigQuery, and emails a confirmation, all without manual scripting.

I just tuned into Dave Kellermanns’ session, “What AI Does These Days Inside Automic.”

The topic? How Automic hooks into any LLM, where that model can live, and why it matters to users.

Dave promised to show four things: connection steps, model options, user benefits, and direct AI usage.

Curious how it plays out?

Stay with me.

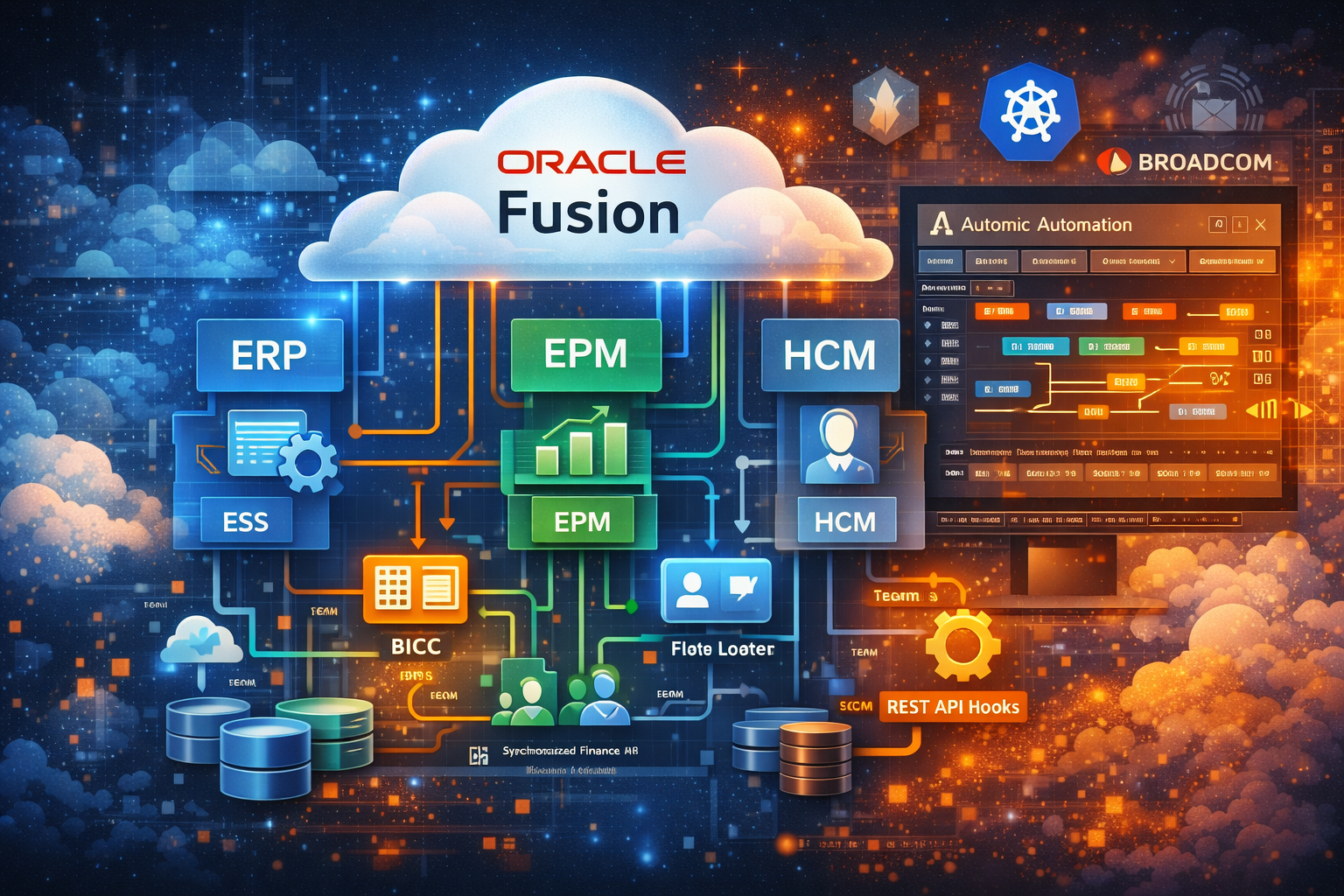

Zero-Friction Setup: Point to Any LLM, Anywhere

First, install Automation AI Services and open its properties file. Set the port at the top, choose your large language model, and save.
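The exact keys depend on your Automation AI Services version, so the names below (`port`, `llm.provider`, `llm.model`) are hypothetical placeholders, not documented settings. This minimal sketch shows the general shape of a `key=value` properties file and reads it back so you can sanity-check the values before touching Automic.

```python
from pathlib import Path
import tempfile

# Hypothetical file contents: key names and values are placeholders,
# not documented Automation AI Services settings.
SAMPLE_PROPERTIES = """\
# Port the AI service listens on (set at the top of the file)
port=8443
# Which large language model the service should use
llm.provider=gemini
llm.model=example-model
"""


def parse_properties(text: str) -> dict[str, str]:
    """Parse simple key=value lines, skipping blanks and # or ! comments.

    Simplified: real Java-style properties also allow ':' separators and
    line continuations, which this sketch does not handle.
    """
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:  # ignore lines that have no '='
            props[key.strip()] = value.strip()
    return props


def load_properties(path: Path) -> dict[str, str]:
    """Read a properties file from disk and parse it."""
    return parse_properties(path.read_text(encoding="utf-8"))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "ai-service.properties"
        path.write_text(SAMPLE_PROPERTIES, encoding="utf-8")
        props = load_properties(path)
        print(props["port"], props["llm.provider"], props["llm.model"])
```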

Then log in to client 0, head to UC_SYSTEM_SETTINGS, and add AUTOMATION_AI_ENDPOINT with the host and port you just defined. Once that setting is saved, Automic can use the service.
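Before saving the setting, it helps to confirm that the AI service is actually listening on the host and port you defined. This is a generic TCP probe, not an Automic feature. The `scheme://host:port` value format is an assumption, so check your product documentation for the exact form AUTOMATION_AI_ENDPOINT expects.

```python
import socket


def endpoint_value(host: str, port: int, scheme: str = "http") -> str:
    """Build a URL-style value for AUTOMATION_AI_ENDPOINT (format assumed)."""
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return f"{scheme}://{host}:{port}"


def is_listening(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure, or timeout
        return False


if __name__ == "__main__":
    host, port = "localhost", 8443  # hypothetical: use your service's host and port
    print("Value to enter:", endpoint_value(host, port))
    print("Reachable:", is_listening(host, port, timeout=1.0))
```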

From there, pick where the model lives. Automic SaaS arrives preconfigured with Gemini.

Need everything on-prem? Point the AI service at a Llama node or any other in-house model. Prefer a private cloud instance behind your firewall? Same steps apply. As Dave put it, “You do have all the options to dictate where the large language model is located.” No public LLMs required.

I like that flexibility. It lets me decide which model fits each security or latency requirement and switch without revisiting the wiring.
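One way to picture "switch without revisiting the wiring": Automic stores only the AI service's endpoint, and the service's own configuration decides which model it calls. The sketch below assumes that split; the profile names, property keys, and hosts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Which LLM the AI service should call. Illustrative fields only."""
    provider: str
    model_url: str


# Hypothetical profiles for two of the options from the session; hosts are placeholders.
PROFILES = {
    "on-prem": ModelProfile("llama", "http://llama.example.internal:8080"),
    "private-cloud": ModelProfile("custom", "https://llm.private.example.com"),
}


def switch_model(service_props: dict[str, str], choice: str) -> dict[str, str]:
    """Return a copy of the AI service's properties pointed at another model.

    Only the model keys change. The service's own port, and therefore the
    AUTOMATION_AI_ENDPOINT value stored in Automic, stays the same.
    """
    profile = PROFILES[choice]  # raises KeyError for an unknown choice
    updated = dict(service_props)
    updated["llm.provider"] = profile.provider
    updated["llm.url"] = profile.model_url
    return updated


if __name__ == "__main__":
    current = {"port": "8443", "llm.provider": "gemini", "llm.url": "(SaaS default)"}
    print(switch_model(current, "on-prem"))
```

Returning a copy rather than mutating in place keeps the previous configuration around, which makes rolling back to the earlier model trivial.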

In short, connect once, select a model, and start testing AI-powered automation right away.

“Analyze” in Action: Human-Readable Debugging

Dave clicked Analyze on a vintage mainframe job (it works the same way for Python or PowerShell scripts), and the LLM walked through his JCL snippet in plain terms: “first we allocate a new data set…” No jargon, just a clear step-by-step readout I can hand to a junior admin.

Next, he opened a Windows job whose command misspelled curl as cuurl. One more right-click, and the report explained the failure and suggested installing curl, checking PATH, and testing the command before updating the Automic job. That’s root cause plus remediation in a single pane.
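Analyze relies on the LLM, not on a rule table. For contrast, here is a deterministic Python sketch that produces the same root-cause-plus-remediation shape for this one error pattern. It is not how Automic implements Analyze; `KNOWN_COMMANDS` and `diagnose` are hypothetical, and the fuzzy match uses the standard library's `difflib`.

```python
import difflib
import re

# Hypothetical list of commands a team expects on its Windows agents.
KNOWN_COMMANDS = ["curl", "powershell", "robocopy", "xcopy", "sqlcmd"]

# Matches both cmd.exe ("'x' is not recognized as an internal or external
# command") and PowerShell ("The term 'x' is not recognized ...").
NOT_RECOGNIZED = re.compile(r"'(?P<cmd>[^']+)' is not recognized")


def diagnose(log_text: str) -> list[str]:
    """Return plain-language findings and remediation steps for a job log."""
    match = NOT_RECOGNIZED.search(log_text)
    if not match:
        return ["No unrecognized-command error found."]
    cmd = match.group("cmd")
    findings = [f"Root cause: Windows could not find the command '{cmd}'."]
    close = difflib.get_close_matches(cmd, KNOWN_COMMANDS, n=1)
    if close:
        findings.append(f"Possible typo: did you mean '{close[0]}'?")
    target = close[0] if close else cmd
    findings += [
        f"Install {target} on the agent if it is missing.",
        f"Check that {target} is on the PATH of the account running the job.",
        f"Run {target} manually on the agent, then update the Automic job.",
    ]
    return findings


if __name__ == "__main__":
    log = ("'cuurl' is not recognized as an internal or external command,\n"
           "operable program or batch file.")
    print("\n".join(diagnose(log)))
```

A rule like this covers exactly one failure mode; the appeal of the LLM approach in the demo is that the same click handled JCL, Windows, and SQL without anyone writing rules.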

He didn’t stop there. A malformed SQL SELECT threw an error in the UI; Dave highlighted the message, hit Analyze, and the model flagged the wrong table name while listing possible issues: case sensitivity, a bad connection, or syntax.

It even admitted, “I don’t know the correct table name,” a sign that it was reasoning from the error message alone rather than drawing on Automic’s knowledge base.
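The model couldn't name the right table because it only saw the error text. When a tool can read the schema, that guess becomes a lookup. This minimal sketch uses Python's built-in `sqlite3` module with made-up table names; other databases expose their catalogs differently (for example, through `information_schema`).

```python
import difflib
import sqlite3
from typing import Optional

NO_SUCH_TABLE = "no such table: "  # prefix of SQLite's error message


def suggest_table(conn: sqlite3.Connection, query: str) -> Optional[str]:
    """Run query; if it fails with 'no such table', suggest the closest real table.

    Returns None when the query succeeds or when no table name is close enough.
    Other SQL errors are re-raised unchanged.
    """
    try:
        conn.execute(query)
        return None
    except sqlite3.OperationalError as exc:
        message = str(exc)
        if not message.startswith(NO_SUCH_TABLE):
            raise
        missing = message[len(NO_SUCH_TABLE):]
        tables = [row[0] for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table'")]
        matches = difflib.get_close_matches(missing, tables, n=1)
        return matches[0] if matches else None


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, total REAL)")
    print(suggest_table(conn, "SELECT * FROM ordres"))
```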

ASK_AI: Your On-Demand Code Generator

ASK_AI grabbed my attention. It’s Automic’s script function that lets the LLM write code or guidance inside a running job, with no extra tooling.

First, Dave asked it for a PowerShell routine that calculates π. Seconds later, the last report displayed a ready-to-run function, proving ASK_AI can author real code mid-execution.
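The demo produced PowerShell. For reference, here is a Python sketch of the kind of routine such a prompt can return, using Machin's formula, π = 16·arctan(1/5) − 4·arctan(1/239). This is not the code ASK_AI generated in the session.

```python
import math


def arctan_series(x: float, terms: int = 30) -> float:
    """Taylor series arctan(x) = x - x^3/3 + x^5/5 - ..., valid for |x| <= 1."""
    total = 0.0
    for k in range(terms):
        total += (-1) ** k * x ** (2 * k + 1) / (2 * k + 1)
    return total


def calculate_pi(terms: int = 30) -> float:
    """Machin's formula: pi = 16*arctan(1/5) - 4*arctan(1/239)."""
    return 16 * arctan_series(1 / 5, terms) - 4 * arctan_series(1 / 239, terms)


if __name__ == "__main__":
    estimate = calculate_pi()
    print(f"{estimate:.12f} (difference from math.pi: {abs(estimate - math.pi):.1e})")
```

Machin's formula converges much faster than the Leibniz series because both arctan arguments are small, so a few dozen terms already reach double precision.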

Next, he wired ASK_AI into a failed Windows job alert. The function parsed the log, spotted the misspelled curl, and suggested fixes: install curl, add it to PATH, retest, update the job.
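ASK_AI's actual script syntax wasn't shown in detail, so here is the same idea in Python: append model-generated resolution guidance to a failure alert. `build_alert` is hypothetical, and `ask_llm` stands in for whatever call reaches your model.

```python
from typing import Callable


def build_alert(job_name: str, log_tail: str, ask_llm: Callable[[str], str]) -> str:
    """Compose a failure alert and append LLM-generated resolution guidance.

    ask_llm is a hypothetical stand-in: it takes a prompt and returns text.
    """
    prompt = (
        f"The Automic job {job_name} failed. Explain the likely root cause "
        f"and list remediation steps.\n\nLog excerpt:\n{log_tail}"
    )
    try:
        guidance = ask_llm(prompt).strip()
    except Exception as exc:  # never let the AI step block the alert itself
        guidance = f"(AI guidance unavailable: {exc})"
    return (
        f"Job {job_name} failed.\n\n"
        f"Log excerpt:\n{log_tail}\n\n"
        f"Suggested resolution:\n{guidance}"
    )


if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:
        return "Install curl, add it to PATH, retest, then update the job."

    print(build_alert("JOB.WIN.DOWNLOAD", "'cuurl' is not recognized", fake_llm))
```

The try/except is the key design choice: if the model is slow or unreachable, the alert still goes out, just without the extra guidance.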

Finally, a plain-language prompt spawned SQL to pull order data and Python to load BigQuery, all executed in one workflow.

Agentic Workflows: Natural-Language Automation for Business Users

Picture a business user typing, “refresh the last 10 days of orders into the sales dashboard,” into a lightweight chat interface.

Automic forwards that line to the LLM through a REST call, and the model assembles a brand-new workflow on the spot, with no prewritten scripts.
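The session didn't show the REST API itself, so the URL and JSON field names below are hypothetical. This sketch only illustrates the shape of such a call: wrap the user's sentence in a prompt and send it as a JSON POST. It builds the request with the standard library's `urllib.request` but does not send it.

```python
import json
import urllib.request

# Hypothetical endpoint and payload shape; not a documented Automic API.
AI_SERVICE_URL = "https://ai-service.example.internal:8443/generate"


def build_workflow_request(user_text: str) -> urllib.request.Request:
    """Wrap a natural-language request as a JSON POST to the AI service."""
    prompt = (
        "Plan an automation workflow for this request. Return the SQL, "
        f"Python, and notification steps in order.\n\nRequest: {user_text}"
    )
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        AI_SERVICE_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_workflow_request(
        "refresh the last 10 days of orders into the sales dashboard")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To send it for real: urllib.request.urlopen(req, timeout=30)
```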

First, AI writes SQL to fetch the data.

Next, it generates Python to load BigQuery; finally, it fires off an email confirmation. I watched Dave run the demo live: an empty job became a completed run that loaded 11 records with 14 columns into the target table.
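In the demo, the LLM wrote each step's code on the fly. The sketch below fixes those steps in place so the orchestration order is easy to see: fetch with SQL, load, then confirm. Here `sqlite3` stands in for the source database, and `load` and `notify` are hypothetical callables standing in for the BigQuery load and the email.

```python
import sqlite3
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Callable


@dataclass
class RunResult:
    rows: int
    columns: int


def run_refresh(
    conn: sqlite3.Connection,
    days: int,
    load: Callable[[list[str], list[tuple]], None],
    notify: Callable[[str], None],
    today: date,
) -> RunResult:
    """Fetch recent orders, hand them to a loader, then send a confirmation."""
    since = (today - timedelta(days=days)).isoformat()
    # Step 1: SQL to fetch the data (in the demo, the LLM generated this).
    cursor = conn.execute("SELECT * FROM orders WHERE order_date >= ?", (since,))
    columns = [desc[0] for desc in cursor.description]
    rows = cursor.fetchall()
    # Step 2: load into the warehouse (stand-in for the BigQuery load).
    load(columns, rows)
    # Step 3: confirmation message (stand-in for the email).
    notify(f"Loaded {len(rows)} records with {len(columns)} columns since {since}.")
    return RunResult(rows=len(rows), columns=len(columns))


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, order_date TEXT, total REAL)")
    conn.execute("INSERT INTO orders VALUES (1, ?, 9.5)", (date.today().isoformat(),))
    print(run_refresh(conn, 10, lambda cols, rows: None, print, date.today()))
```

Passing `load` and `notify` in as callables keeps each step swappable, which loosely mirrors how the generated workflow in the demo chained independent SQL, Python, and email steps.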

This shift puts automation in the hands of the people who own the metrics, while Ops still sees every action inside Automic.

Need fresher dashboards and fewer tickets? This path gets you there.

Security & Deployment Considerations

Before we talk outcomes, here’s a recap of the deployment options Dave showed and how to set them up.

- SaaS default: “For Automic SaaS it is preconfigured with Gemini.” You can start from that baseline and switch models when needed.

- On-prem choice: You can “put it with an on-premise large language model such as Llama or any other language model you have on site.”

- Private cloud option: You can “use it with a cloud hosted AI specifically to your company,” and Dave adds, “no need to use public LLMs.”

- Simple configuration: Set the port and model in the properties file, then in Automic “go to the UC_SYSTEM_SETTINGS” and add AUTOMATION_AI_ENDPOINT to point to the service and port.

Key Takeaways & Next Steps

Dave showed setup, model placement options, and live uses: Analyze explains scripts and errors; ASK_AI generates PowerShell, SQL, and Python code; and a chat prompt builds a workflow and emails a completion notice.

Next, let’s apply the same pattern to your environment. We’ll configure the endpoint, wire your preferred LLM, and pilot the Analyze and ASK_AI flows on your jobs and notifications.

Book a free Automic AI Readiness Check with my team.

Just say Bob offered it to you.
